How does ZFS save my bacon and let me sleep comfortably?

Deyan Kostov, a QA engineer at Bianor, shares his experience building a convenient and safe home setup that makes remote work painless and keeps his data protected.

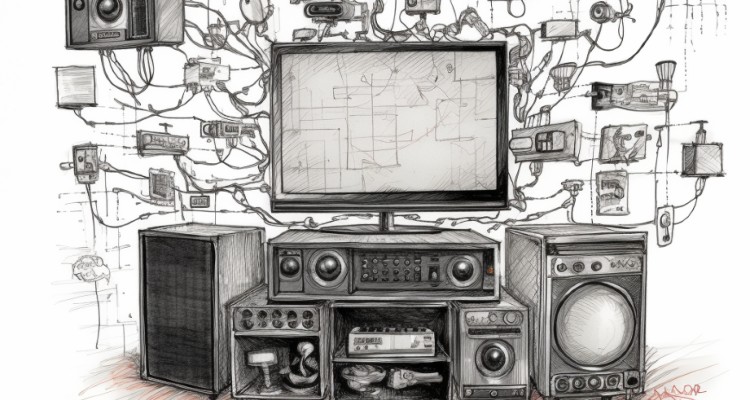

An IT home office

Working from home is becoming widely accepted in the private IT business, and it has been the norm in Free/Open Source Software since forever. If the Linux kernel (30 million lines of code) can be developed mostly from home or by distributed teams via email and online conferences, most software products can be, too! People have come up with various “home data center” solutions for their specific requirements. Here is what I need to test a customer’s product, a Virtual Machine (VM) appliance with a Web interface:

1. Two testing computers to cover the five supported browser/OS combinations (Chrome, Edge, and Firefox on Windows plus Chrome and Safari on Mac OS X).

2. A virtualization host to run the product. Each instance of the VM takes at least two CPU cores or threads, 8GB RAM, and 40GB disk space. SSD storage is preferable, because faster queries, restarts, restores, etc. mean I wait less and test more. Typically, about 4-5 VMs need to be online at once.

3. Storage for images of the VMs of current and old releases, customer data (has to be encrypted!), demo databases for testing, and all kinds of files accumulated during the process. Each day I download the new build as a 4GB image, and since we work on two releases most of the time, that makes 8GB a day. Old builds are kept for reproducing old bugs or working on customer issues with early versions of the product. So yes, oodles of space are needed. Again, SSD preferred. It’s 2020, dude, enough with the spinning rust!
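To get a feel for the numbers in the list above, here is a rough back-of-the-envelope sketch. The per-VM figures and the 8GB of daily images come from the list; the 250 working days per year and the idea of keeping every single build are my own assumptions for illustration, and the per-VM figures are minimums.

```python
# Figures taken from the requirements list above (per-VM values are minimums).
VMS_ONLINE = 5          # upper end of "about 4-5 VMs"
THREADS_PER_VM = 2
RAM_GB_PER_VM = 8
DISK_GB_PER_VM = 40
DAILY_IMAGES_GB = 8     # two releases x one 4GB image each

# Assumption (not from the article): roughly 250 working days per year.
WORKING_DAYS_PER_YEAR = 250


def host_requirements(vms=VMS_ONLINE):
    """Minimum resources the virtualization host needs for `vms` running VMs."""
    return {
        "threads": vms * THREADS_PER_VM,
        "ram_gb": vms * RAM_GB_PER_VM,
        "disk_gb": vms * DISK_GB_PER_VM,
    }


def yearly_image_storage_gb(days=WORKING_DAYS_PER_YEAR):
    """Space needed per year if every downloaded build image is kept."""
    return days * DAILY_IMAGES_GB


print(host_requirements())
print(yearly_image_storage_gb())
```

Under these assumptions the host needs at least 10 threads, 40GB RAM, and 200GB of fast disk for the running VMs, and the build archive grows by about 2TB a year if nothing is ever deleted.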

Home users generally follow these two approaches:

– Use second-hand hardware discarded from data centers. Data centers refresh their equipment frequently because electricity consumption and space utilization matter more to them than to home users, so it pays for them to jump on the new stuff quickly.

– Use commodity hardware from the stores. Interestingly enough, the largest operators of data centers, like Google and Facebook, also use commodity hardware. They buy the cheapest stuff they can find and have figured out that, in the long run, data redundancy is less expensive than “enterprise” hardware that is certified to work 99.99% of the time. The “always-on” equipment does not protect you from software bugs, user mistakes, malicious attackers, natural disasters, etc. With so many risks to your data, redundancy is always better. In fact, computers barely work!

“Computers Barely Work”

“Computers Barely Work” has recently become a meme. But when you think about it, HDDs are direct descendants of the first phonographs from the late 19th century, through vinyl records, then magnetic tapes, disks… They all have a rotating medium and a head moving across tracks to read/write a signal. It’s just that the rotation now is very, very fast, and the density of tracks is reaching some physical limits for reading. The latest generation HDDs are blasting microwaves (MAMR) or heat (HAMR) on the media to allow more data to be written in the same space. The head is so close to the spinning surface, it’s like a plane flying mere centimeters above the ground! A tiny shake or vibration can make it crash.

A single bit-flip

Naturally, HDDs experience all kinds of damage to the written data. A single bit flip in code can break everything, or even worse, it can silently provide bad data to the user. How do most disks protect us from it? CRC checksums. They protect against a single bit flip, but not necessarily against two or more. The only way you will notice such damage is when your code is broken, your videos glitch, your music skips, or your favorite picture of your cat is half smudged. No bueno!
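The "one flip is caught, two can slip through" idea is easiest to see with the simplest possible check, a single parity bit. To be clear, this is a stand-in I picked for illustration and not the actual error-checking code a disk uses; the helper names `parity` and `flip_bit` are made up for this sketch.

```python
def parity(data: bytes) -> int:
    """Return 0 or 1: whether the number of 1 bits in data is even or odd."""
    return sum(bin(byte).count("1") for byte in data) % 2


def flip_bit(data: bytes, index: int) -> bytes:
    """Return a copy of data with one bit flipped (0 = lowest bit of the first byte)."""
    out = bytearray(data)
    out[index // 8] ^= 1 << (index % 8)
    return bytes(out)


original = b"cat"
stored_parity = parity(original)      # saved next to the data when it is written

one_flip = flip_bit(original, 3)      # one bit damaged
two_flips = flip_bit(one_flip, 12)    # a second bit damaged as well

print(parity(one_flip) != stored_parity)    # True: the single flip is noticed
print(parity(two_flips) != stored_parity)   # False: the double flip goes unnoticed
```

Real checks are much stronger than one parity bit, but the principle is the same: any fixed-size check has some combinations of damage it cannot see.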

Just “for science,” I flipped 3 bits in the banner image of bianor.com. I opened the JPEG file in my favorite raw text editor (nano, since you asked) and changed one letter from C to D. In ASCII, that is a 3-bit difference: the low three bits go from 011 to 100. Judge the results for yourselves: that tiny change produced 3 ugly stripes in the right part of the image, with off colors and some junk added.
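If you want to check the bit count yourself, a few lines of Python will do. This is just a quick sketch; `differing_bits` is a helper name I made up for it.

```python
def differing_bits(a: str, b: str) -> int:
    """Count the bits that differ between two single ASCII characters."""
    return bin(ord(a) ^ ord(b)).count("1")


for ch in "CD":
    print(ch, format(ord(ch), "08b"))   # C 01000011, then D 01000100

print(differing_bits("C", "D"))         # 3
```

XOR marks every position where the two bytes disagree, so counting the 1s in the result gives the number of flipped bits.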

That’s why the new generation of file systems verifies a hash of every block of data, and if there is some data redundancy, like RAID, damaged data is automatically repaired from a correct copy. Neat! ZFS is the oldest, most stable, and most feature-rich of them all. There are also APFS in Apple’s macOS and btrfs in Linux, and some others, but they lack features and a long proven record of stability. Btrfs has a history of instability with RAID-5! As a whole, the other projects have smaller developer communities, which means that they will continue to lag behind.
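The check-and-repair idea can be sketched in a few lines. This is a toy model and not how ZFS is actually implemented: the `MirroredStore` class is hypothetical, the two "disks" are plain dictionaries, and SHA-256 is simply the hash I picked for the example.

```python
import hashlib


def checksum(block: bytes) -> str:
    """Hash of one data block (SHA-256 chosen for this sketch)."""
    return hashlib.sha256(block).hexdigest()


class MirroredStore:
    """Toy two-way mirror that keeps each block's checksum apart from the data."""

    def __init__(self):
        self.copies = [{}, {}]   # two "disks", each mapping block id -> bytes
        self.checksums = {}      # block id -> checksum recorded at write time

    def write(self, block_id, data: bytes) -> None:
        for copy in self.copies:
            copy[block_id] = data
        self.checksums[block_id] = checksum(data)

    def read(self, block_id) -> bytes:
        expected = self.checksums[block_id]
        # Find the first copy whose contents still match the recorded checksum.
        good = None
        for copy in self.copies:
            if checksum(copy[block_id]) == expected:
                good = copy[block_id]
                break
        if good is None:
            raise IOError(f"block {block_id}: all copies are corrupt")
        # Self-heal: overwrite any damaged copy with the verified one.
        for copy in self.copies:
            if copy[block_id] != good:
                copy[block_id] = good
        return good


store = MirroredStore()
store.write(0, b"favorite cat picture")
store.copies[0][0] = b"favorite cat pidture"   # simulate silent corruption on disk 0

print(store.read(0))          # the good data, served from disk 1
print(store.copies[0][0])     # disk 0 has been repaired as a side effect
```

The point of keeping the checksum separate from the block is that a damaged block cannot vouch for itself; the reader always compares against what was recorded at write time.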

Enough intro. How did I meet my testing needs?