Seamless Video Stream Frames-Metadata Synchronization

Resolving the considerable delay in the synchronization of the video and metadata streams in STANAG 4609.

The Concern

Video Stream – Metadata Synchronization Delay

Over years of developing video streaming solutions, especially for the defense industry, we have encountered a significant problem: synchronizing per-frame metadata with the media. We call it the Secure Information Sharing Challenge. It forces users to develop methods that ensure the veracity of streaming data throughout its lifecycle, from the point(s) of collection or creation to the point(s) of consumption. In STANAG 4609, the considerable synchronization delay between the video stream and the metadata stream (at least 20 milliseconds) degrades the reliability of data transfer and introduces errors in target tracking and precision weapon guidance. The faster these processes run, the higher the penalty for this error, jeopardizing the Near-Real-Time (NRT) effect.
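The speed penalty can be made concrete with simple arithmetic: during a synchronization delay, a moving target or platform travels a distance of speed × delay, and that distance becomes the position mismatch between a frame and its metadata. The minimal Python sketch below illustrates the scaling; the function name and the example speeds are hypothetical and chosen only for illustration.

```python
def position_error_m(speed_m_s: float, delay_ms: float) -> float:
    """Distance (in metres) an object moves during a synchronization delay.

    This is the spatial mismatch between a frame and metadata that lags it by delay_ms.
    """
    return speed_m_s * delay_ms / 1000.0


# Illustrative speeds only (not taken from the source), at a 20 ms delay.
for speed in (50, 250):
    print(f"{speed} m/s -> {position_error_m(speed, 20)} m")
# 50 m/s -> 1.0 m
# 250 m/s -> 5.0 m
```

The error grows linearly with speed, which is why fast processes such as weapon guidance are the most sensitive to the delay.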

Solution Overview

A Single Data Stream Pairing Video and Metadata Streams

At Bianor, we are developing a solution to the Secure Information Sharing Challenge: a new method for synchronizing metadata with the video stream. Instead of two separate streams (video and metadata), we create a single data stream that carries both. The new stream is generated by a key implemented in a hardware (HW) module at the video camera source and by a software (SW) module that embeds the coordinates (or other metadata) in the frame. Both the selection of the frame “start point” and the embedding of the coordinates in the frame are synchronized to the delivery time of the coordinates (or other data). As a result, the delay between data delivery and its appearance in the frame stays minimal or constant. Comparing the synchronization of the STANAG 4609 metadata stream with the appearance of metadata in the frames of the new data stream demonstrates the advantages of Bianor’s solution.

Technical Explanation

Overview

The synchronization delay arises because video and metadata are carried as independent streams in STANAG 4609 and in the related family of STANAGs that organize the transfer of reconnaissance imagery and associated auxiliary data between reconnaissance Collection Systems and Exploitation Systems.

The new solution for Seamless Video Stream Frames-Metadata Synchronization (SVSMS) consists of an HW module and its firmware, which synchronizes the data and writes the new unencoded stream to a file-based representation. The HW module’s firmware, the Parameter Placer, embeds the coordinates (the metadata used in this proposal) inside the image. The HW functions are implemented on an FPGA in VHDL, without a video encoder. The firmware that places the data in the frames of the new stream is implemented in C/C++.
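To make “placing the coordinates inside the image” concrete, here is a minimal Python sketch of what a Parameter Placer might do. It assumes an 8-bit grayscale frame held as a list of bytearray rows and packs latitude and longitude as two little-endian IEEE-754 doubles into the first 16 pixels of the last row. The pixel layout, function names, and frame size are all hypothetical; the source states only that the C/C++ firmware places the coordinates in the image.

```python
import struct

PAYLOAD_FORMAT = "<dd"  # hypothetical layout: latitude, longitude as little-endian doubles
PAYLOAD_SIZE = struct.calcsize(PAYLOAD_FORMAT)  # 16 bytes -> 16 pixels


def place_parameters(frame, lat, lon):
    """Write lat/lon into the first PAYLOAD_SIZE pixels of the last row, in place."""
    last_row = frame[-1]
    if len(last_row) < PAYLOAD_SIZE:
        raise ValueError("frame row too short for the payload")
    last_row[:PAYLOAD_SIZE] = struct.pack(PAYLOAD_FORMAT, lat, lon)
    return frame


def read_parameters(frame):
    """Recover (lat, lon) from the pixels written by place_parameters."""
    return struct.unpack(PAYLOAD_FORMAT, bytes(frame[-1][:PAYLOAD_SIZE]))


# Usage: a tiny 64x48 black frame with example coordinates.
frame = [bytearray(64) for _ in range(48)]
place_parameters(frame, 42.6977, 23.3219)
print(read_parameters(frame))  # (42.6977, 23.3219)
```

Writing raw bytes into pixels only works because the new stream is unencoded: a lossy video encoder would alter these pixel values and corrupt the payload.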

Solution

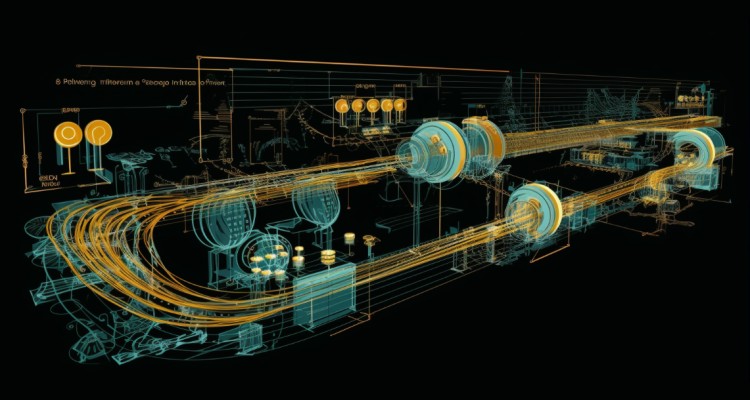

Figure 1: Synchronization of the Metadata (MD) with the Frames in a STANAG 4609 Video Stream

Figure 1 shows how metadata and frames are synchronized after STANAG 4609 encapsulation. The number of metadata packets M is arbitrary relative to the number of frames, ranging from one metadata packet for several frames to several metadata packets for a single frame. As a result, the delay of the metadata relative to the video frame is almost always negative: video frames arrive “faster” than the metadata.
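Under an idealized model, the offset in Figure 1 can be written explicitly. Assume, for illustration only (these are not requirements of STANAG 4609), that both streams are strictly periodic and that each frame is paired with the latest metadata packet already received. Let T_f be the frame period, T_m the metadata period, and φ the arrival time of the first metadata packet:

```latex
\begin{aligned}
t^{f}_{i} &= i\,T_f, \qquad t^{m}_{k} = \varphi + k\,T_m,\\
k_i &= \left\lfloor \frac{i\,T_f - \varphi}{T_m} \right\rfloor,\\
\delta_i &= t^{m}_{k_i} - t^{f}_{i} = \varphi + k_i\,T_m - i\,T_f,
\qquad -T_m < \delta_i \le 0 .
\end{aligned}
```

For example, with T_f = 40 ms, T_m = 30 ms and φ = 5 ms, frames 1 to 5 get offsets of -5, -15, -25, -5 and -15 ms: the offset is never positive, and it keeps changing from frame to frame.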

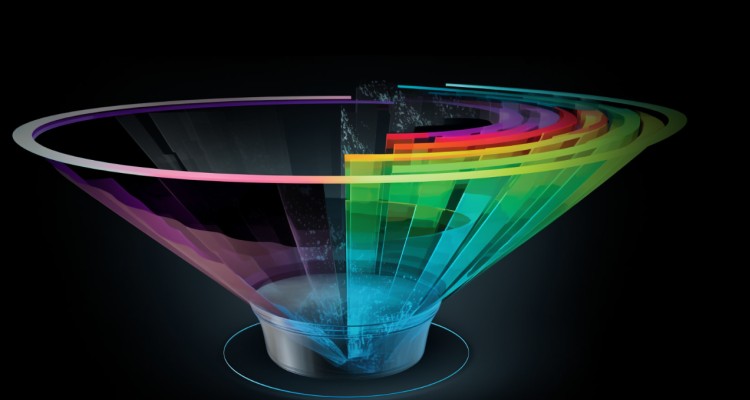

Figure 2: Seamless Video Stream Frames-Metadata Synchronization (SVSMS)

Figure 2 shows metadata synchronization in the new stream. The HW key captures the incoming synchronization parameter and sends a “start video” signal to the camera sensor. While the camera sensor generates its signals and the image is captured, the Parameter Placer (PP) receives the incoming parameter data and, once the frame is complete, writes it into the image as pixels. Completing this step releases the HW key, which issues the “complete image” signal.
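The handshake in Figure 2 can be modelled as a simple event sequence. The hypothetical Python model below assumes fixed sensor readout and placement durations, and it assumes that parameters arriving while the key is still held are dropped; the source does not specify that behaviour. The class and function names are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class FrameRecord:
    parameter: str     # parameter value carried in the frame
    start_ms: int      # time of the "start video" signal
    complete_ms: int   # time of the "complete image" signal (key released)


def hw_key_cycle(arrivals, readout_ms, place_ms):
    """Model the HW key / Parameter Placer handshake for (time_ms, parameter) arrivals.

    Hypothetical assumptions: fixed readout and placement durations, and
    parameters that arrive while the key is still held are dropped.
    """
    records = []
    key_released_at = None
    for t_arrival, parameter in sorted(arrivals):
        if key_released_at is not None and t_arrival < key_released_at:
            continue  # key still held by the previous frame
        start = t_arrival                          # "start video" issued on arrival
        complete = start + readout_ms + place_ms   # sensor readout, then PP writes pixels
        records.append(FrameRecord(parameter, start, complete))
        key_released_at = complete                 # "complete image" releases the key
    return records


arrivals = [(0, "p0"), (10, "p1"), (50, "p2"), (100, "p3")]
for rec in hw_key_cycle(arrivals, readout_ms=33, place_ms=2):
    print(rec.parameter, rec.start_ms, rec.complete_ms)
# p0 0 35
# p2 50 85
# p3 100 135
```

Because each frame starts when its parameter arrives, the delivery-to-appearance delay in this model is readout_ms + place_ms for every frame, instead of varying from frame to frame as in the independent-stream case.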

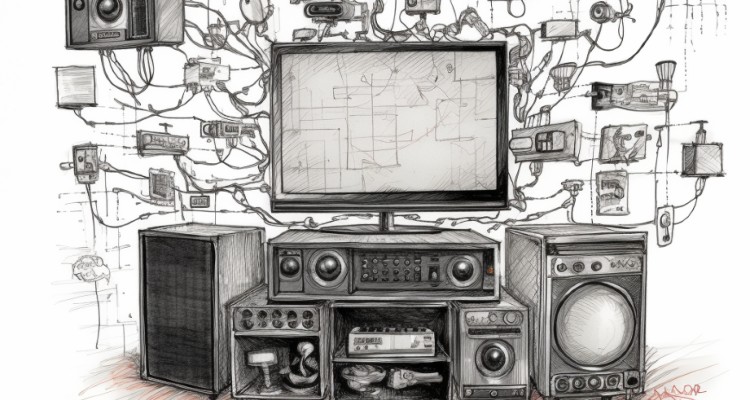

Figure 3: Block diagram of the new stream production

Figure 3 shows the HW devices and SW designed for this proposal. The parameters from the GPS module serve as input. The HW key and the Parameter Placer are implemented on the FPGA module. A CPU collects the main video stream and stores it on the MC (Memory Card). The CPU connects to the software on the PC over USB. A PC application, the Visualizer, displays the incoming unencrypted data, providing a structural and visual representation of the new stream.
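The source does not say in which format the GPS module delivers its parameters. Assuming, purely for illustration, that it emits NMEA 0183 GGA sentences (a common GPS output), the Python sketch below shows how coordinates could be validated and extracted before they reach the HW key. The function names are hypothetical; the checksum rule (XOR of all characters between “$” and “*”) and the ddmm.mmmm / dddmm.mmmm field layout follow NMEA 0183.

```python
from functools import reduce


def nmea_checksum(body: str) -> int:
    """XOR of every character between '$' and '*' (NMEA 0183 checksum)."""
    return reduce(lambda acc, ch: acc ^ ord(ch), body, 0)


def _to_degrees(value: str, hemisphere: str, degree_digits: int) -> float:
    """Convert NMEA (d)ddmm.mmmm plus hemisphere to signed decimal degrees."""
    degrees = int(value[:degree_digits])
    minutes = float(value[degree_digits:])
    result = degrees + minutes / 60.0
    return -result if hemisphere in ("S", "W") else result


def parse_gga(sentence: str) -> tuple:
    """Return (latitude, longitude) in decimal degrees from a $--GGA sentence."""
    sentence = sentence.strip()
    if not sentence.startswith("$") or "*" not in sentence:
        raise ValueError("not an NMEA sentence")
    body, checksum = sentence[1:].split("*", 1)
    if nmea_checksum(body) != int(checksum[:2], 16):
        raise ValueError("checksum mismatch")
    fields = body.split(",")
    if not fields[0].endswith("GGA"):
        raise ValueError("not a GGA sentence")
    lat = _to_degrees(fields[2], fields[3], 2)  # latitude: ddmm.mmmm
    lon = _to_degrees(fields[4], fields[5], 3)  # longitude: dddmm.mmmm
    return lat, lon


SAMPLE = "$GPGGA,123519,4807.038,N,01131.000,E,1,08,0.9,545.4,M,46.9,M,,*47"
lat, lon = parse_gga(SAMPLE)
print(round(lat, 4), round(lon, 4))  # 48.1173 11.5167
```

Rejecting sentences with a bad checksum keeps corrupted coordinates from ever being written into a frame.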

A second PC application, the Analyzer, will show the desynchronization between the metadata PID (the transport stream packet identifier that carries the metadata) and the video images in STANAG 4609, demonstrating the improvement achieved by the new stream.
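As a rough idea of what the Analyzer could compute, the Python sketch below assumes that both the video frames and the packets on the metadata PID carry presentation timestamps (PTS) on the 90 kHz MPEG-2 clock, and pairs each frame with the latest metadata packet at or before it. The function name and the report fields are hypothetical.

```python
from bisect import bisect_right
from statistics import mean

PTS_CLOCK_HZ = 90_000  # MPEG-2 PTS ticks per second


def desync_report(frame_pts, metadata_pts):
    """Summarise per-frame metadata offsets in milliseconds.

    Each frame is paired with the latest metadata PTS at or before its own PTS;
    frames with no earlier metadata are skipped. Negative offsets mean the
    metadata is older than the frame.
    """
    md = sorted(metadata_pts)
    offsets = []
    for pts in frame_pts:
        i = bisect_right(md, pts)  # number of metadata packets with PTS <= frame PTS
        if i == 0:
            continue
        offsets.append((md[i - 1] - pts) * 1000 / PTS_CLOCK_HZ)
    if not offsets:
        return None
    return {
        "frames": len(offsets),
        "min_ms": min(offsets),
        "max_ms": max(offsets),
        "mean_ms": mean(offsets),
        "spread_ms": max(offsets) - min(offsets),
    }


# Usage: 25 fps video (3600 ticks per frame) with irregular metadata.
report = desync_report([0, 3600, 7200, 10800], [900, 4500, 5400])
print(report["min_ms"], report["max_ms"], report["spread_ms"])  # -60.0 -20.0 40.0
```

A wide spread_ms is the signature of independent streams; for the new stream, where metadata is embedded in the frame itself, the offset would ideally be constant.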

Conclusion

SVSMS is the first solution of its kind. It is also a strong competitor to existing methods, especially where interoperability with STANAG-based systems is required. The shorter synchronization delay benefits both the civil and security sectors, with media streaming, forensics, and defense as the main target markets. In the civil sector, autonomous or semi-autonomous security systems that require fast reactions can benefit from the solution. In defense, it can play a crucial role in target tracking and precision weapon guidance. As we conclude this exploration of our synchronization paradigm, we invite you to envision a future where video streams and metadata unite seamlessly, fostering real-time accuracy and reliability across a spectrum of applications.
