Content Ingestion in Video Streaming: Navigating the Challenges

What is ingestion in video streaming, and why is it essential for an OTT platform?

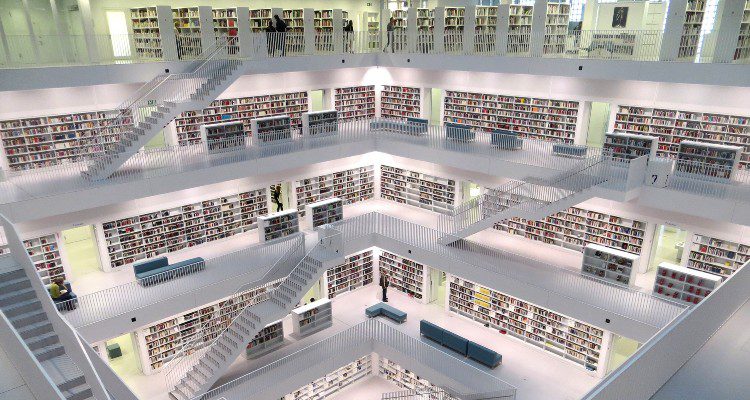

Ingestion, while less widely recognized than encoding or transcoding, is a cornerstone of the media management process. Ingestion ensures that every piece of media data finds its correct place in the vast digital library, ready for seamless delivery when called upon.

Context and Importance

In the early days of digital media, the volume of data was relatively manageable, and manual ingestion processes were feasible. Picture a small concert with a few dozen attendees; the doorkeeper could easily handle ticket checking and seat allocation. However, as media evolved, growing in scale and diversity, the ingestion process had to keep pace.

With the advent of various media formats, resolutions, and increasing file sizes, coupled with the need for real-time availability, the ingestion process has been propelled to a position of central importance. It’s the first crucial step in the media management workflow, setting the stage for encoding, storage, and delivery.

An efficient ingestion process is not just about accommodating large volumes of data; it’s also about ensuring data integrity, extracting and managing metadata, and converting files into a format suitable for subsequent processes. In this grand festival of media streaming, the ingestion process ensures that each performance is ready to go on stage at a moment’s notice, contributing to a seamless viewing experience for the audience. The role of ingestion in media management is thus both foundational and transformative. As we navigate the digital age, understanding its workings becomes increasingly vital.

Deep Dive into the Ingestion Process

Ingestion is the preliminary yet indispensable stage in the media processing pipeline. It encompasses the transfer of media files from a source, be it a camera, an external drive, or a network location, into the media processing system. This process is more than just a simple file copy operation; it’s an intricate dance that involves several crucial steps, each serving a distinct purpose.

- The first step is the actual transfer of the media files. We achieve this via various methods, including direct upload, FTP (File Transfer Protocol), or API-driven ingestion, depending on the scale and complexity of the media assets. This step is akin to bringing all the ingredients into the kitchen.

- Once we transfer the media files, they are typically converted into a mezzanine format suitable for further processing. This step, which may involve transmuxing (repackaging the streams into a different container) or transcoding (re-encoding them), is analogous to prepping the ingredients for cooking – chopping, marinating, etc. The mezzanine format is chosen to preserve the original media’s quality while being efficient for subsequent encoding and streaming.

- Simultaneously, metadata associated with the media files is extracted and managed. This could include data such as the file’s codec, resolution, and duration, along with more complex metadata like closed captions, subtitles, and ad markers. This metadata extraction is akin to noting down the recipe – it provides essential information about the media file that guides the subsequent stages in the media processing pipeline.
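To make the metadata step a little more concrete, here is a minimal sketch of header parsing for MP4-style (ISO base media file format) containers, where a file is a sequence of boxes that each start with a 4-byte big-endian size and a 4-byte type. The function and helper names (`iter_top_level_boxes`, `make_box`) and the synthetic sample are assumptions made for illustration; a production system would more likely rely on an established media inspection tool and would descend into nested boxes to read codec, resolution, and duration.

```python
import struct
from io import BytesIO
from typing import BinaryIO, Iterator, Tuple


def iter_top_level_boxes(stream: BinaryIO) -> Iterator[Tuple[str, int]]:
    """Yield (type, size_in_bytes) for each top-level ISO BMFF box."""
    while True:
        header = stream.read(8)
        if len(header) < 8:
            return  # end of stream (or a truncated header)
        size, raw_type = struct.unpack(">I4s", header)
        header_len = 8
        if size == 1:
            # A size of 1 means a 64-bit size follows the type field.
            ext = stream.read(8)
            if len(ext) < 8:
                return
            (size,) = struct.unpack(">Q", ext)
            header_len = 16
        box_type = raw_type.decode("latin-1")
        if size == 0:
            # A size of 0 means the box runs to the end of the file.
            yield box_type, 0
            return
        if size < header_len:
            raise ValueError(f"invalid size {size} for box {box_type!r}")
        yield box_type, size
        stream.seek(size - header_len, 1)  # skip over the payload


def make_box(box_type: bytes, payload: bytes) -> bytes:
    """Build a minimal box for the demo below (illustrative helper)."""
    return struct.pack(">I4s", 8 + len(payload), box_type) + payload


# Synthetic two-box file: a file-type box followed by a placeholder movie box.
sample = (
    make_box(b"ftyp", b"isom" + struct.pack(">I", 512) + b"isomiso2")
    + make_box(b"moov", b"\x00" * 16)
)
for box_type, size in iter_top_level_boxes(BytesIO(sample)):
    print(box_type, size)
```

The sketch only walks the top level of the container, which is enough to confirm that a file is structurally an MP4 and to locate the `moov` box where the detailed track metadata lives.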

The Ingestion Technology

Underlying the ingestion process are several technical components, each playing a crucial role. Let’s delve into some of these.

- File transfer protocols

- File transfer protocols are the workhorses powering the transfer of media files during ingestion. Common choices are FTP and SFTP (SSH File Transfer Protocol), which, despite the similar name, is a separate protocol that runs over SSH rather than a secured version of FTP. However, accelerated transfer solutions like Aspera or Signiant might be employed for large-scale media operations dealing with massive volumes of data. These solutions use techniques such as UDP-based data transfer and parallel streams to achieve significantly higher transfer speeds than traditional FTP, particularly on high-latency links.

- Metadata extraction

- Metadata extraction is another critical aspect of ingestion. Metadata provides a wealth of information about the media file and can guide various stages of the media processing workflow. For example, the codec and resolution metadata can inform the encoding process, while captions and subtitle metadata are crucial for ensuring accessibility during playback. Metadata extraction involves parsing the media file headers and often requires a deep understanding of various media file formats.

- Integrity checks

- Integrity checks are the guardians of data quality during ingestion. Given the large size of media files and the potential for network errors during transfer, a file can get corrupted during the ingestion process. Integrity checks, typically implemented using checksum algorithms like MD5 or SHA-1, ensure that the ingested file is identical to the source file, thereby preserving data quality.
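As a rough illustration of the integrity check described above, the sketch below hashes a file-like object in fixed-size chunks, so that even very large media files never need to fit in memory, and then compares the source digest with the ingested one. The names `stream_digest` and `is_intact` are hypothetical, and SHA-256 is used as the default here as an assumption; MD5 or SHA-1 can be selected through the `algorithm` parameter.

```python
import hashlib
import io
from typing import BinaryIO


def stream_digest(stream: BinaryIO, algorithm: str = "sha256",
                  chunk_size: int = 1024 * 1024) -> str:
    """Hash a binary stream chunk by chunk and return the hex digest."""
    digest = hashlib.new(algorithm)
    for chunk in iter(lambda: stream.read(chunk_size), b""):
        digest.update(chunk)
    return digest.hexdigest()


def is_intact(source: BinaryIO, ingested: BinaryIO,
              algorithm: str = "sha256") -> bool:
    """Return True when both streams produce the same digest."""
    return stream_digest(source, algorithm) == stream_digest(ingested, algorithm)


# In-memory stand-ins for a source file and its ingested copy.
original = b"frame-data" * 1000
corrupted = original[:-1] + b"X"  # one byte damaged in transit
print(is_intact(io.BytesIO(original), io.BytesIO(original)))   # True
print(is_intact(io.BytesIO(original), io.BytesIO(corrupted)))  # False
```

With real files, the same functions can be called on objects returned by `open(path, "rb")`.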

Case Study: YouTube’s Ingestion Strategy

YouTube, the world’s largest video-sharing platform, offers a fascinating case study of a robust and scalable ingestion strategy. With over 500 hours of video uploaded every minute, the platform needs an ingestion process that can handle enormous amounts of data while ensuring quality and efficiency.

- Direct Upload and APIs

- YouTube supports direct file uploads from users, a straightforward and user-friendly method of ingestion. However, for large-scale operations, such as media companies or prolific content creators, YouTube offers the YouTube Data API v3. This API allows developers to automate the upload process, providing efficiency and scalability. The API-driven ingestion is akin to having a conveyor belt system in a factory, enabling the continuous, automated transfer of goods.

- Supported Formats and Transcoding

- YouTube uses a flexible ingestion strategy to handle the vast array of video formats and codecs uploaded by users. It accepts various video formats – from MOV and MP4 to AVI and WMV. Once uploaded, YouTube’s engine transcodes these files into a standard format for further processing. This approach is similar to translating various languages into a universal one, ensuring smooth communication.

- Metadata Extraction

- YouTube’s ingestion process also involves robust metadata extraction. When a video is uploaded, YouTube extracts and stores relevant metadata such as title, description, and tags. It also supports more complex metadata like subtitles and closed captions, ensuring accessibility. This process is akin to a librarian categorizing and tagging a new book, making it easily discoverable for future readers.

- Integrity Checks

- Given the volume of uploads, ensuring data integrity is crucial for YouTube. As part of its ingestion process, YouTube performs integrity checks on uploaded videos, ensuring they haven’t been corrupted during the upload process. These integrity checks act as the quality control inspectors in the factory, ensuring the final product is in perfect condition.

- Scalability

- The most impressive aspect of YouTube’s ingestion process is its scalability. Despite the massive volume of uploads, YouTube’s ingestion system rarely buckles under the load. This is due to its highly distributed architecture, which can scale horizontally to accommodate increasing demand. This scalability is akin to a factory that can quickly add more assembly lines when the demand increases.

In conclusion, YouTube’s ingestion strategy is a testament to the platform’s success. Combining a versatile API-driven approach, robust transcoding and metadata extraction processes, thorough integrity checks, and scalable architecture allows YouTube to manage a staggering volume of video uploads efficiently, setting the stage for seamless encoding and streaming.

Advanced Ingestion Techniques

As digital media continues to burgeon, the ingestion process must evolve to efficiently handle the increasing data volumes. Two cutting-edge techniques in media ingestion that have shown promise in speed, scalability, and reliability are parallel processing and cloud-based ingestion.

Parallel Processing

Parallel processing is a technique that involves breaking down the ingestion tasks into smaller, independent functions that can be executed concurrently across multiple processors or cores. This strategy leverages the architectural benefits of modern multi-core systems to expedite the ingestion process, vastly improving the throughput.

In a high-load environment where we continuously ingest large volumes of media files, parallel processing mitigates bottlenecks by distributing the load, reducing the overall time for ingestion. It also enhances the system’s ability to handle spikes in ingestion requests, improving the robustness of the overall workflow.
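The idea can be sketched with Python’s standard `concurrent.futures` module. In this hedged example, `ingest_one` is a hypothetical per-asset task (it only fingerprints and sizes a payload), and `ingest_batch` fans a batch of assets out across a pool of workers. A thread pool is used because it suits I/O-heavy steps such as reading and transferring files; for CPU-bound steps such as transcoding, a process pool would be the more natural choice.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, Tuple


def ingest_one(item: Tuple[str, bytes]) -> Tuple[str, dict]:
    """Hypothetical per-asset task: fingerprint and size one media payload."""
    name, payload = item
    return name, {
        "sha256": hashlib.sha256(payload).hexdigest(),
        "bytes": len(payload),
    }


def ingest_batch(assets: Dict[str, bytes], max_workers: int = 4) -> Dict[str, dict]:
    """Run the independent per-asset tasks concurrently and collect results."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(pool.map(ingest_one, assets.items()))


# Eight synthetic "media files" held in memory for the demo.
assets = {f"clip_{i}.mp4": bytes([i]) * 100_000 for i in range(8)}
report = ingest_batch(assets)
print(len(report), report["clip_0.mp4"]["bytes"])
```

Because each asset is processed independently, adding workers raises throughput until a shared resource such as disk or network bandwidth becomes the limit.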

Cloud-Based Ingestion

Cloud-based ingestion leverages the elasticity and distributed nature of the cloud to streamline the media management process. This strategy provides a scalable solution to handle growing media libraries without physical infrastructure constraints.

Cloud-based systems inherently offer geo-replication and data redundancy, ensuring data durability and availability despite server failures or data center outages. The distributed nature of the cloud also enables geo-distributed ingestion, allowing media files to be uploaded closer to their point of origin, thereby reducing latency and improving ingestion speeds.

Moreover, cloud-based ingestion can integrate seamlessly with other cloud services, such as AI and machine learning models for automated metadata extraction, content moderation, or advanced encoding methods, adding more value to the ingestion process.

Real-world Applications of Ingestion Techniques

Twitch

Twitch, a live-streaming platform, faces unique challenges with ingestion due to the real-time nature of its content. To handle this, Twitch employs a distributed ingestion network with multiple points of presence (PoPs) across the globe.

Twitch’s ingestion system automatically selects the optimal PoP based on network latency and PoP load when a user starts streaming, ensuring a smooth and fast ingestion process. Once the video platform ingests the stream, it quickly transcodes it into multiple formats and bitrates for distribution to viewers.
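How such a selection might work can be sketched in a few lines. This is not Twitch’s actual algorithm, which is not described here; the `PoP` type, the `select_pop` function, the load cutoff, and the scoring formula are all assumptions chosen to illustrate balancing latency against load.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class PoP:
    name: str
    latency_ms: float  # measured round-trip time from the streamer
    load: float        # current utilization, from 0.0 (idle) to 1.0 (full)


def select_pop(pops: Iterable[PoP], max_load: float = 0.9,
               load_penalty_ms: float = 100.0) -> PoP:
    """Pick the PoP with the lowest latency after penalizing busy ones."""
    # Assumed policy: skip PoPs that are nearly saturated.
    candidates = [p for p in pops if p.load < max_load]
    if not candidates:
        raise ValueError("no PoP has spare capacity")
    # Assumed score: latency plus a penalty proportional to load.
    return min(candidates, key=lambda p: p.latency_ms + load_penalty_ms * p.load)


pops = [
    PoP("fra", latency_ms=20, load=0.95),  # closest, but saturated
    PoP("ams", latency_ms=25, load=0.50),
    PoP("lhr", latency_ms=30, load=0.10),
]
print(select_pop(pops).name)  # lhr
```

In this example the nearest PoP is excluded for being overloaded, and the lightly loaded one wins over the slightly closer but busier alternative.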

Twitch also uses parallel processing to handle the real-time transcoding task. It divides the incoming live stream into short segments and transcodes them concurrently, minimizing latency and enabling near real-time delivery to viewers.

Moreover, Twitch leverages AWS’s cloud infrastructure for its ingestion process, benefiting from AWS’s scalability, reliability, and global reach. This cloud-based approach allows Twitch to handle the massive and continuous influx of live streams effectively.

In conclusion, real-world applications of advanced ingestion techniques underscore their pivotal role in managing diverse and growing media content. Streaming platforms like YouTube and Twitch exemplify how leveraging technologies like parallel processing and cloud-based ingestion can lead to highly efficient and scalable ingestion workflows. As digital media continues to proliferate, embracing these advanced techniques is no longer an option but a necessity for any media platform.

Challenges in Ingestion

Media ingestion, while a crucial step in the streaming workflow, presents several challenges that engineers and system architects need to handle meticulously.

Large File Sizes and Transfer Speeds

Bandwidth is essential, but it is no longer the only answer to large media files.

As media quality continues to improve, with 4K and 8K content becoming commonplace, the file sizes associated with these media assets have ballooned. Transferring these large files quickly and efficiently is a challenge that needs to be addressed. Techniques such as multipart upload, where a file is divided into smaller parts and uploaded in parallel, and high-speed data transfer protocols like Aspera or Signiant help mitigate this issue.
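The multipart idea can be sketched as follows. `InMemoryStore` is a hypothetical stand-in for an object storage service, and `split_into_parts` and `multipart_upload` are illustrative names rather than any vendor’s API; real services have their own calls and minimum part sizes.

```python
import threading
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List, Tuple


def split_into_parts(data: bytes, part_size: int) -> List[Tuple[int, bytes]]:
    """Cut data into numbered parts (numbering starts at 1)."""
    if part_size <= 0:
        raise ValueError("part_size must be positive")
    return [
        (index + 1, data[offset:offset + part_size])
        for index, offset in enumerate(range(0, len(data), part_size))
    ]


class InMemoryStore:
    """Hypothetical storage backend that reassembles parts by number."""

    def __init__(self) -> None:
        self._parts: Dict[int, bytes] = {}
        self._lock = threading.Lock()

    def upload_part(self, part_number: int, body: bytes) -> None:
        with self._lock:
            self._parts[part_number] = body

    def part_count(self) -> int:
        return len(self._parts)

    def complete(self) -> bytes:
        # Parts may arrive in any order; joining by number restores the file.
        return b"".join(self._parts[n] for n in sorted(self._parts))


def multipart_upload(data: bytes, store: InMemoryStore, part_size: int,
                     max_workers: int = 4) -> bytes:
    """Upload all parts in parallel, then ask the store to assemble them."""
    parts = split_into_parts(data, part_size)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(store.upload_part, n, body) for n, body in parts]
        for future in futures:
            future.result()  # re-raise any upload error
    return store.complete()


payload = bytes(range(256)) * 10  # 2,560 bytes of synthetic media
store = InMemoryStore()
assembled = multipart_upload(payload, store, part_size=1000)
print(store.part_count(), assembled == payload)  # 3 True
```

A practical benefit of this design is that a failed part can be retried on its own instead of restarting the whole transfer.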

File Integrity

Ensuring the integrity of files during the transfer process is paramount. Errors during transmission can lead to corrupted files, disrupting the streaming experience. Checksum verification is a standard method used to tackle this problem: a digest is computed for the original file and compared with the digest of the received file. Any discrepancy indicates an error in transmission, triggering retransmission.
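The verify-then-retransmit loop can be sketched like this. `transfer_with_retry` and `make_flaky_channel` are hypothetical names, and the "channel" is a simulation that corrupts the first few sends; in a real system `send` would wrap an actual network transfer.

```python
import hashlib
from typing import Callable, Tuple


def transfer_with_retry(data: bytes, send: Callable[[bytes], bytes],
                        max_attempts: int = 3) -> Tuple[bytes, int]:
    """Send data, verify its digest, and resend on mismatch.

    Returns the verified bytes and the number of attempts used.
    """
    expected = hashlib.sha256(data).hexdigest()
    for attempt in range(1, max_attempts + 1):
        received = send(data)
        if hashlib.sha256(received).hexdigest() == expected:
            return received, attempt
    raise IOError(f"checksum mismatch after {max_attempts} attempts")


def make_flaky_channel(failures: int) -> Callable[[bytes], bytes]:
    """Simulated channel that flips the last byte on the first N sends."""
    state = {"remaining": failures}

    def send(data: bytes) -> bytes:
        if state["remaining"] > 0 and data:
            state["remaining"] -= 1
            return data[:-1] + bytes([data[-1] ^ 0xFF])
        return data

    return send


received, attempts = transfer_with_retry(b"media payload", make_flaky_channel(2))
print(attempts)  # 3
```

Capping the number of attempts keeps a persistently bad link from stalling the pipeline; the caller can then route the file to an error queue or an alternative path.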

Metadata Extraction and Management

Metadata, the information about the media file, such as title, duration, format, and other details, is crucial for organizing and managing media assets. Extracting this metadata accurately and consistently can be challenging, especially given the diverse media formats. Automated metadata extraction tools, often leveraging AI and machine learning, are being utilized to address this issue. These tools can handle a wide array of formats and extract detailed metadata, improving the efficiency and accuracy of the process.

Scalability

As streaming platforms grow, so does the media volume they must ingest. Ingestion systems need to scale to handle this increasing load without a drop in performance. Cloud-based ingestion and distributed processing techniques are becoming increasingly popular solutions to this problem, providing the scalability necessary to keep up with growing demand.

Conclusion

In wrapping up, I want to personally express how crucial media ingestion is in the larger framework of media management. As we’ve explored, the ability to handle large file transfers, meticulously manage metadata, and employ advanced techniques like parallel processing and cloud-based ingestion isn’t just impressive – it’s vital for the future of media streaming. This deep dive has helped elucidate some of the complexities in this fascinating field. In today’s vast ocean of information, Bianor aims to help you make sense of these advanced concepts. Don’t hesitate to contact us and discuss any video streaming-related topic.
